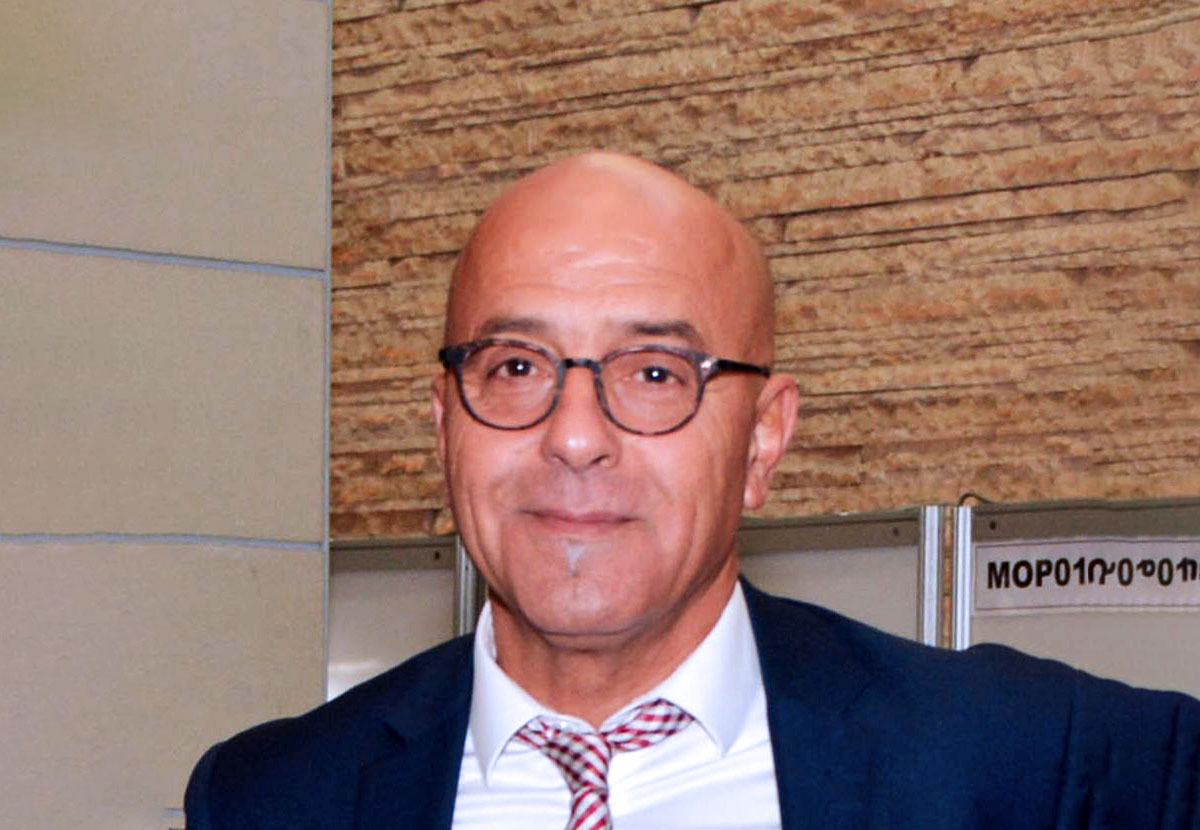

Interview with Marco Aldinucci, coordinator of the High-Performance Center for Artificial Intelligence (HPC4AI) at the University of Turin and co-leader of Spoke 1 of ICSC, dedicated to High Performance Computing (HPC) and Big Data

What are we talking about when we refer to HPC?

High Performance Computing means using extremely high computing power to solve a problem faster, or to solve a bigger problem than the initial one in the same amount of time. In the first case, HPC comes into play for scientific or industrial problems where the value of the information degrades over time. Examples include weather forecasting or simulations of natural phenomena that trigger operational scenarios, pharmaceutical chemistry, and materials science, where the computational complexity is enormous and the analysis needs to be completed in a reasonable time.

In the second case, HPC makes it possible to solve problems where the computational grid becomes denser, increasing the number of calculations and the size of the problem. Staying with the example of weather forecasting, I might need to move from a 1 km by 1 km grid to a 100-meter by 100-meter or even a 1-meter by 1-meter grid, for instance to predict a flash flood or to determine whether a self-driving car will encounter ice at a specific intersection. Or, looking at Artificial Intelligence (AI), I might want to increase the number of parameters in my Large Language Model (LLM) to make it capable of addressing more complex tasks, moving from translating a text to reasoning or understanding irony. To do this, I will need a larger model: I’ll have to go from 7 to 70, or even 700 billion parameters, which means a matrix with 700 billion cells, a nonlinear increase in computation time (the complexity of the basic operation, multiplying dense matrices, is cubic), and a corresponding growth in the space occupied.

A standard computer such as a laptop gets its power through miniaturisation, but for a computer that is a million times more powerful than a laptop, we do not have the technology to miniaturise a million times further. So, to solve this problem, we place many systems side by side, covering an area as large as a soccer field. Sometimes, jokingly, when someone asks me what HPC is, I say: “If you can see it from a satellite, it’s HPC,” but I could also say, “If it consumes more than 1 megawatt, it’s HPC.” In short, it’s a big deal, and an energy-hungry one too.
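To put rough numbers on the scaling described in this answer, here is a minimal Python sketch. The 100 km region side is a hypothetical value chosen only for illustration, and the flop count assumes the schoolbook dense matrix product (about 2n³ operations), not any particular optimised library.

```python
def grid_cells(side_km: float, cell_m: float) -> int:
    """Number of square cells needed to tile a square region of the given side."""
    cells_per_side = round(side_km * 1000 / cell_m)
    return cells_per_side ** 2


def matmul_flops(n: int) -> int:
    """Approximate operation count of the schoolbook n x n dense matrix product."""
    return 2 * n ** 3


REGION_KM = 100  # hypothetical region side, not a figure from the interview

base = grid_cells(REGION_KM, 1000)
for cell_m in (1000, 100, 1):
    cells = grid_cells(REGION_KM, cell_m)
    print(f"{cell_m:>5} m grid: {cells:.1e} cells, {cells // base:,}x the 1 km grid")

# Cubic complexity: doubling the matrix side multiplies the work by eight.
print(matmul_flops(2000) / matmul_flops(1000))
```

Refining the grid tenfold in each direction multiplies the cell count by one hundred, before accounting for any extra time steps or vertical levels that a real forecasting model would also need.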

And what do we mean when we talk about Big Data?

I would start with what it is not: Big Data does not just mean “lots of data”. Big Data refers to the process of collecting, managing, and extracting knowledge from datasets characterised by the so-called “5 Vs” (Volume, Velocity, Variety, Veracity, and Value) using distributed algorithms; these are tasks that would be impossible to carry out using traditional analytical methods and non-scalable computing systems. Of course, there’s the issue of quantity, because the more data I have, the more likely I am to extract useful knowledge, but it’s also about how that knowledge is extracted. There’s a well-known legend, never empirically proven, often used as an anecdote to illustrate the kind of hidden correlations Big Data can reveal: you’re shopping and the supermarket offers you a loyalty card to access discounts. In return, it tracks your purchasing habits, profiles you, and uses that profile to organise product placement on shelves. It ends up placing beer next to diapers. Clearly, babies don’t drink beer, but adults with babies go out less, and so they buy beer to drink at home. This kind of correlation, which would be hard to uncover using traditional statistics since it links seemingly unrelated items, is precisely what Big Data is about. Today, modern AI techniques such as neural networks and machine learning (ML) integrate well with Big Data techniques and enhance their effectiveness for tasks like classification, segmentation, and clustering of text and images. The two areas started to merge around 2017; this was the moment we moved from designing AI solutions for specific Big Data problems to more general-purpose, universal models capable of performing a variety of tasks. This led to the rise of so-called foundation models, such as the Large Language Models behind ChatGPT, which handle both statistical and generative tasks and are therefore more powerful than traditional Big Data algorithms.
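The beer-and-diapers anecdote can be made concrete with a classic association measure called lift: how much more often two items appear together than they would if they were bought independently. The sketch below is a toy, single-machine illustration. The baskets are invented for the example, and the names `BASKETS`, `lift`, and `rank_pairs` are hypothetical; it is not the distributed tooling the answer refers to.

```python
from itertools import combinations

# Invented shopping baskets, purely illustrative.
BASKETS = [
    {"diapers", "beer", "milk"},
    {"diapers", "beer"},
    {"diapers", "beer", "bread"},
    {"diapers", "milk"},
    {"beer", "crisps"},
    {"bread", "milk"},
    {"bread", "crisps"},
    {"milk", "bread", "crisps"},
]


def lift(baskets, a, b):
    """P(a and b) / (P(a) * P(b)); values above 1 mean the items co-occur more than chance."""
    n = len(baskets)
    p_a = sum(a in basket for basket in baskets) / n
    p_b = sum(b in basket for basket in baskets) / n
    p_ab = sum(a in basket and b in basket for basket in baskets) / n
    return p_ab / (p_a * p_b)


def rank_pairs(baskets):
    """Score every pair of items by lift, strongest association first."""
    items = sorted(set().union(*baskets))
    scored = [(lift(baskets, a, b), a, b) for a, b in combinations(items, 2)]
    return sorted(scored, reverse=True)


for score, a, b in rank_pairs(BASKETS)[:3]:
    print(f"{a} + {b}: lift = {score:.2f}")
```

With five items there are only ten pairs to score; with a real catalogue of tens of thousands of products and billions of receipts, the number of pairs and the data volume are what push this kind of analysis onto distributed systems.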

In this vast landscape, where does Spoke 1 of ICSC, which you co-lead, fit in?

ICSC is composed of 10 Spokes. Eight focus on computing applications, ranging from high-energy physics to medicine, while two, called technological or methodological Spokes, focus on computing as a tool: traditional computing in the case of Spoke 1, and quantum computing in the case of Spoke 10. Spoke 1, specifically, works to optimise the computing tools needed to design and run applications, both on the hardware side (like processors, systems, machines, memory) and the software side (low-level software such as drivers and operating systems, development tools like compilers and libraries, and tools for application design). This approach, known as co-design and introduced by the U.S. more than 10 years ago within the Exascale Computing Program, means that the software does not have to chase the hardware, or vice versa, but it is limited to producing prototypes, which do not always lead to industrial follow-ups. Turning research into industrial innovation means giving companies the opportunity to preserve their investments over time without needing to redesign their solutions with every technological advancement. As part of Spoke 1, we’re working toward this goal by adopting specific methods and technologies that ensure portability of applications across different generations of supercomputers, and that help reduce development time and costs and optimise applications.

Has Spoke 1 already produced prototypes that have had, or are having, industrial impact?

Spoke 1 has produced the first-ever version of PyTorch for RISC-V. PyTorch is by far the most widely used programming framework for AI, and RISC-V is a rising star in the processor world. RISC-V is a free, modular, and extensible hardware architecture for processors, designed to be simple, efficient, and suitable for a wide range of applications, from microcontrollers to supercomputers. Its extensibility allows the production of effective and energy-efficient processors even for applications of great commercial interest, such as inference in AI models. Independence from licenses that protect the machine language makes it well suited to the challenges of technological sovereignty (for example, in Europe and China): anyone who wants to, and is capable, can develop their own RISC-V processor without needing a license from AMD or ARM, a license that is often denied for geopolitical reasons. For PyTorch, we started from experimental hardware to arrive at experimental software, but it would also be important to be able to do the opposite, moving from experimental software to experimental hardware. This is more complex because testing with hardware has very different costs, but we have obtained funding to do this, thanks to the European project DARE (Digital Autonomy with RISC-V in Europe), worth 240 million euros. Under the coordination of ICSC, several Italian universities and research institutions, including INFN, are participating in the project with the goal of designing new processors, also leveraging knowledge of software that runs on current processors.

What’s the advantage of going from software to hardware?

By proceeding in this direction, it’s possible to design the hardware so that software runs more efficiently, consumes less energy, or both. For example, processors used in scientific computing typically perform calculations at 64-bit precision, sometimes 32-bit. AI applications today, however, can carry out computations using 16-bit, 8-bit, or even 4-bit precision. By specialising the hardware for low-precision inference tasks in AI, and so reducing precision from 64 bits to 4 bits, energy consumption and computation time are reduced by a huge factor, achieving a performance gain of over 200 times with the same number of transistors. From a methodological perspective, the goal is to move from a general-purpose computing device to a collection of units, each specialised for a specific type of computation. Spoke 1, as part of the DARE project, uses chiplet technology for this purpose, which replaces a single large chip with many smaller chips, each designed and built separately with different functions, and then packaged together. Each chiplet activates efficiently and quickly when needed and remains off, without consuming power, when its function isn’t required. Power consumption is a huge issue in computing because electricity becomes heat as a function of frequency: the faster you want to go, the higher the frequency, and the more heat is generated, which we can’t effectively dissipate. Today, an AI chip consumes around 1 kW of power, and its heat density ranges between 70 and 80 watts per square centimeter, while a nuclear reactor core reaches about 110 watts per square centimeter (with fuel cores in PWR reactors ranging from 80 to 150 W/cm²). All the studies we have indicate a constant increase, so we imagine we may reach 5 kW per chip within a few years. If we don’t solve the heat dissipation problem, these devices will begin to deteriorate and malfunction.
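One part of the precision argument that is easy to check is storage: the memory needed just to hold a model's weights scales linearly with the number of bits per parameter. The sketch below uses the 7, 70, and 700 billion parameter sizes mentioned earlier in the interview; the function name `weight_bytes` is hypothetical, and the calculation ignores activations, caches, and any per-block quantisation metadata.

```python
def weight_bytes(n_params: int, bits: int) -> float:
    """Bytes needed to store n_params weights at the given bit width."""
    return n_params * bits / 8


MODEL_SIZES = (7_000_000_000, 70_000_000_000, 700_000_000_000)
BIT_WIDTHS = (64, 32, 16, 8, 4)

for n_params in MODEL_SIZES:
    row = ", ".join(
        f"{bits}-bit: {weight_bytes(n_params, bits) / 1e9:,.1f} GB"
        for bits in BIT_WIDTHS
    )
    print(f"{n_params / 1e9:.0f}B parameters -> {row}")
```

Going from 64 to 4 bits shrinks the weights by a factor of 16. The much larger performance gain quoted in the answer concerns specialised arithmetic hardware and is not something this storage calculation attempts to reproduce.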

Besides specialisation into subcomponents, are you testing any other strategies for heat dissipation?

We’re testing several, all with the same goal: efficiently removing large amounts of heat from a small surface area. Air cooling is not sufficient today, because it doesn’t provide enough thermal exchange. Instead, the most commonly used technology is water cooling: water flows through tubes embedded in a plate that is in contact with the processor and carries away the heat through direct contact. This strategy works, but it has its limits. The more heat there is to dissipate, the greater the amount of water that needs to flow through the pipes, which increases the pressure, raises the risk of the fluid becoming turbulent, and requires highly durable, aerospace-grade pipes. Should one of the pipes break, the water would end up where the electricity runs, and that is a very high risk to take. So we’ve tested another solution, which consists of using evaporation instead of contact: an insulating, dielectric, and harmless fluid passes over the heat source and evaporates. Evaporation is far more efficient than contact cooling for heat dissipation and, in addition, it doesn’t endanger the circuits if a pipe breaks. Also, the pressure involved is much lower: a cheap pump is enough to keep the fluid circulating. This mechanism, called two-phase cooling (because the fluid changes phase twice: it evaporates from liquid to gas and is then recondensed), is about forty times more efficient than air. Of course, it’s not forty times more efficient than water, since water is already quite efficient, but it’s an interesting experiment, even from a system design perspective. Another possibility is the ammonia cycle, based on adsorption. Here, heat is used to trigger a chemical compound to crystallise. The crystallisation process absorbs heat, thereby lowering the temperature. Right now, at the University of Turin, in collaboration with Spoke 1, we are working on broader experiments.
We’re building a new data center on the university campus, where we aim to experiment with renewable energy power supply systems integrated with hydrogen storage. Hydrogen systems work like a battery: when there’s excess energy, they convert water into hydrogen, and when energy is needed, they convert hydrogen back into electricity. We know hydrogen has lower efficiency than chemical batteries, but it also offers certain advantages, and no one has yet tested it on data centers.
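The trade-off between water cooling and evaporative cooling described in this answer comes down to two textbook heat balances: a liquid that only warms up removes P = ṁ·c_p·ΔT, while a fluid that evaporates removes P = ṁ·h_fg. The sketch below applies both to the 1 kW and 5 kW chip powers quoted in the interview. The 10 K coolant temperature rise and the 100 kJ/kg latent heat for a dielectric fluid are assumptions picked for illustration, not measurements from the HPC4AI experiments.

```python
WATER_CP = 4186.0             # J/(kg*K), specific heat of liquid water
ASSUMED_DELTA_T = 10.0        # K, assumed coolant temperature rise (illustrative)
ASSUMED_LATENT_HEAT = 1.0e5   # J/kg, assumed dielectric-fluid value (illustrative)


def single_phase_flow(power_w: float, cp: float, delta_t: float) -> float:
    """Mass flow in kg/s for a liquid that only warms up: P = m_dot * cp * dT."""
    return power_w / (cp * delta_t)


def two_phase_flow(power_w: float, latent_heat: float) -> float:
    """Mass flow in kg/s for a fluid that fully evaporates: P = m_dot * h_fg."""
    return power_w / latent_heat


for power_w in (1000.0, 5000.0):
    water = single_phase_flow(power_w, WATER_CP, ASSUMED_DELTA_T)
    evap = two_phase_flow(power_w, ASSUMED_LATENT_HEAT)
    print(f"{power_w / 1000:.0f} kW chip: water {water * 60:.2f} kg/min, "
          f"evaporating fluid {evap * 60:.2f} kg/min")
```

Under these assumed values, the required flow grows linearly with chip power in both cases, which is why a move from 1 kW to 5 kW per chip puts so much pressure on the plumbing.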

How does Italy position itself compared to the rest of Europe in this field?

Italy is certainly among the leading countries dedicating efforts to these technologies, but science needs to gain the support of industry. We’re full of stories where Italian research didn’t find industrial follow-up and, curiously, even though the inventors of many technologies were Italian, we own none of it. The microprocessor, for example, was invented by Federico Faggin from Vicenza, and the two companies in the world that provide tools to design nearly 100% of processors were both founded by Alberto Sangiovanni Vincentelli, a Milanese based in California. Today, with the DARE project, which is receiving financial backing from a European company, we have a new opportunity: to establish in Europe a supply chain for the design and production of high-performance processors, which is currently lacking and whose absence forces us to rely on the United States. Compared to U.S. technologies, RISC-V offers a major advantage: it was created as an open-source architecture, and not only allows users to use it freely, but also gives them the ability to contribute to its design by adding specific instructions tailored to their needs. This opens the door to investment, as it no longer requires an insurmountable financial commitment. Then there’s Leonardo, the Cineca supercomputer hosted at the DAMA Technopole in Bologna, which is not the most powerful computing machine in Europe, but is the most in-demand among research groups. Why? Because Spoke 1 doesn’t just design processors, it provides users with a fully functioning ecosystem of services to use them, along with automation systems that make their lives easier. So, in answer to your question about whether Italy is active and effective in this field, yes, we are effective, as confirmed by usage data on Leonardo. We’re only missing industrial deployment, which we’re currently working on by aiming to make HPC applications easier to develop and more durable over time, and therefore less expensive.

What does the future hold for supercomputing in Italy?

The hope is to invest independently in the software side and to collaborate with other European players on hardware, ideally with the support of a Europe-wide policy that encourages investments in this direction. But starting from software is essential. Imagine switching phones and picking one with a different processor. Do you notice? Not really. But when you turn it on and realise it comes with a whole new, hard-to-use digital ecosystem or lacks the features you’re used to, you probably regret it and want to switch back. In AI and scientific computing, for example, GPUs have been the breakthrough technology of the AI revolution, because they are accelerators designed to handle a very specific kind of problem: high-density numerical calculations, typically linear algebra. NVIDIA is not the only company making GPUs (Intel and AMD do too), but everyone turns to NVIDIA because they invested from the beginning in making their products easy to use and well-integrated into software ecosystems. Therefore, these are the models we should look to in the immediate future, especially with a view toward long-term goals: achieving European technological sovereignty in processor development and improving energy efficiency. Energy is the next big challenge. We have the opportunity in this field to make a step forward, especially in light of recent developments in the U.S., where the government has shut down all energy-efficiency-related projects and funding. The University of Turin is already very active in this area. Thanks to a €5 million investment, its HPC4AI data center has reached 92% energy efficiency, compared to a national average of 65%, and was recognised a few years ago as one of Italy’s greenest data centers. Of course, the goal is to go even further, getting close to 100% efficiency, and with the redesign of the center, we’re focusing on energy aspects at multiple levels.
From an architectural standpoint, for instance, we’re focusing on recycling and reusing materials rather than disposing of them, and more broadly, we want to be able to measure the global impact of the structure and its operations. We need to quantify our ecological footprint, so that we can set realistic yet ambitious goals for improvement.
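The interview does not say how the 92% and 65% efficiency figures are defined. One common reading, assumed here, is that they express the share of a facility's total energy that actually reaches the computing equipment, which is the inverse of the widely used Power Usage Effectiveness (PUE) metric. Under that assumption, a minimal sketch of the conversion looks like this; the function names are hypothetical.

```python
def pue_from_efficiency(efficiency: float) -> float:
    """PUE = total facility energy / IT energy, assuming efficiency = IT / total."""
    return 1.0 / efficiency


def overhead_per_it_kwh(efficiency: float) -> float:
    """Energy spent on cooling, power conversion, etc. for each kWh delivered to IT."""
    return pue_from_efficiency(efficiency) - 1.0


for label, efficiency in (("HPC4AI", 0.92), ("national average", 0.65)):
    print(f"{label}: PUE ~ {pue_from_efficiency(efficiency):.3f}, "
          f"overhead ~ {overhead_per_it_kwh(efficiency):.3f} kWh per IT kWh")
```

Read this way, approaching 100% efficiency means pushing the overhead term toward zero, so that almost every kilowatt-hour entering the building does computing work.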

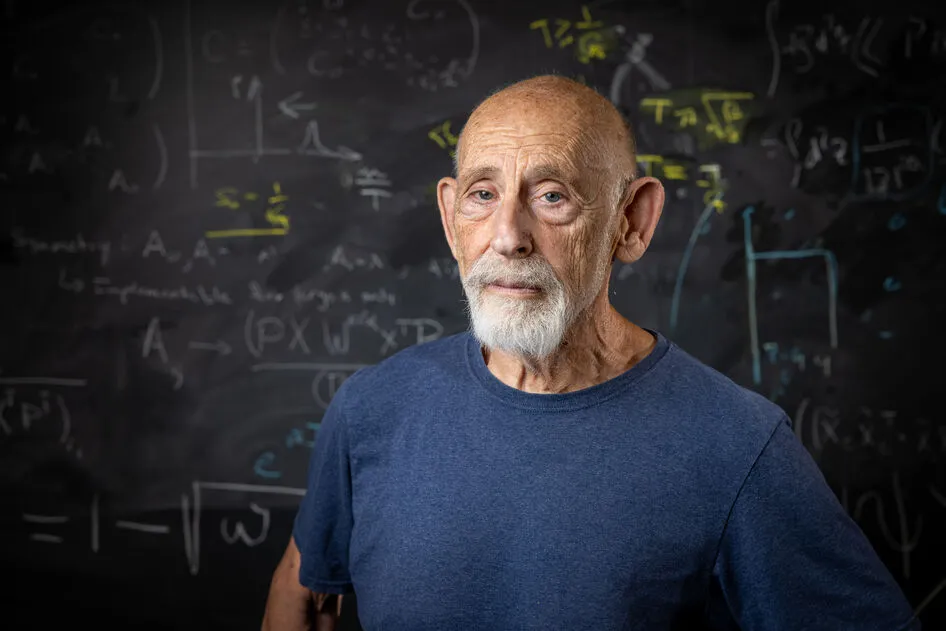

BIO

Marco Aldinucci is a professor of Computer Science and coordinator of the Parallel Computing research group at the University of Turin. He founded the HPC4AI@UNITO laboratory and the national HPC laboratory of the CINI consortium, of which he is director. He is co-leader of Spoke 1 of the National Center for Research in High Performance Computing, Big Data and Quantum Computing (ICSC), dedicated to developing highly innovative hardware and software technologies for future supercomputers and computing systems.