BIG DATA, COMPUTING AND QUANTUM COMPUTING

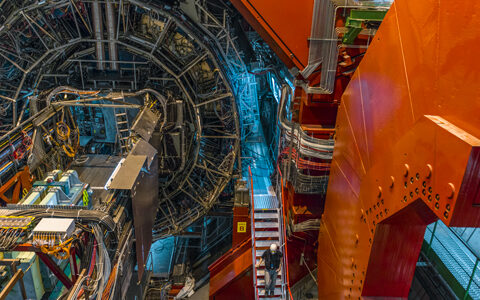

Big science experiments are a fundamental part of contemporary physics research, from high-energy physics to astronomy and astroparticle physics. Among other things, these experiments produce enormous amounts of data, which must be archived, analysed, interpreted, and shared. This is the big data and digitalisation revolution, whose impact, of course, extends far beyond physics and science: it now reaches almost every sector of society. Managing big data requires substantial computing resources, as well as innovative technological solutions and increasingly advanced IT resources. As a result, supercomputers, advanced numerical simulations, and cutting-edge network infrastructure have recently become essential components of physics research, driven by ever faster technological progress. Then there is another revolution already underway, that of machine learning and artificial intelligence, whose applications are already widely exploited in research and promise to become even more so in the future.

Looking further ahead, a revolution with potentially even greater impact, though still distant in time, is the development of quantum computers, whose most interesting applications could lie in scientific research and, in particular, in physics research.