n.8 | November 2025

Compliant, specialised, ethical: the next Italian AI

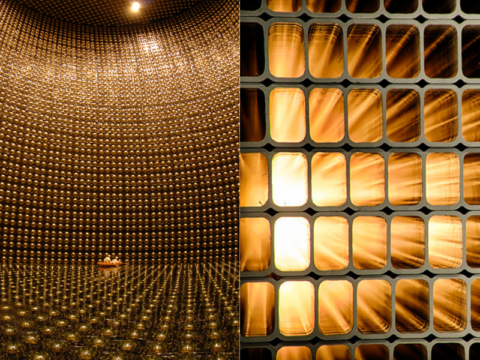

Prompting the Real. Artists–AI Co-creation is the title of the exhibition that will light up Palazzo Poggi in Bologna on 15 and 16 November. It is a valuable opportunity to turn around an action that has become part of everyday life: formulating a prompt, the command that guides what an AI generates. What happens if, instead, it is the AI that gives an input to the artist within a creative process? Does this open up new scenarios? Or does it reproduce mechanisms from which we would like to free ourselves? The exhibition explores the potential and the limits of this co-creation, a tension between possibility and risk that runs through all fields of artificial intelligence, from creativity to the economy, from urban planning to scientific research. In all these contexts, AI comes up against unpredictable human and social dynamics, nuances of behaviour that are difficult to grasp, and complex, layered levels of decision-making. If the models that guide it are not built in a fair, inclusive and safe way, it is unlikely to produce results that meet expectations. We discussed the pervasiveness of AI, the production of non-stereotyped outputs, and ethical and sustainable models with Michela Milano, director of the centre that conceived Prompting the Real, the Alma Mater Research Institute on Human-Centred Artificial Intelligence (ALMA AI), in collaboration with the National Research Centre in HPC, Big Data and Quantum Computing (ICSC) and the National Institute for Nuclear Physics (INFN), and under the patronage of the FAIR Foundation – Future Artificial Intelligence Research.

Michela Milano is Professor at the University of Bologna, where she directs the Alma Mater Research Institute on Human-Centred Artificial Intelligence (ALMA AI) Interdepartmental Centre. She also directs the Digital Societies centre at FBK Fondazione Bruno Kessler, and has been Vice-President of the European Association on Artificial Intelligence and Executive Advisor to the Association for the Advancement of Artificial Intelligence. She was part of the group of experts that drafted the national strategy on AI and is a member of the Italian delegation in the Horizon Europe Programme Committee for Cluster 4. She is the author of over 180 papers in international journals and conferences, has won numerous awards and projects, and is involved in major strategic initiatives on AI at national and European level.

Michela Milano

Interview with Michela Milano, Professor at the University of Bologna, where she directs the Alma Mater Research Institute on Human-Centred Artificial Intelligence (ALMA AI) Interdepartmental Centre

How did ALMA AI come about, and with what objectives?

ALMA AI is an interdepartmental centre involving 28 departments of the University of Bologna and over 520 researchers and lecturers, in addition to a large number of PhD students working in this field. It was established in 2020 with the aim of building a critical mass capable of working comprehensively on topics related to artificial intelligence. Specifically, we set ourselves the challenge of addressing artificial intelligence, within a generalist university such as ours, not only in terms of technological and scientific developments, but also in its strong connection with applications in medicine, agriculture, mechatronics and aerospace, and with the humanities and social sciences, from economics and law to ethics and psychology. On the one hand, we wanted to analyse the impact of these technologies on society, law and the market; on the other, we wanted to contribute to the development of AI models that take human dynamics and complexities into account.

- 26/09-10/12

- 6-11 November

- 21-23 November

- 23 November, Malnisio (PN): Malnisio Science Festival

LIGO, Virgo and KAGRA observe 'second generation' black holes for the first time

New results on the Majorana neutrino thanks to a “noise-cancelling” algorithm

Particle Chronicle © 2025 INFN

EDITORIAL BOARD

Coordinator Martina Galli;

Project and contents Martina Bologna, Cecilia Collà Ruvolo, Eleonora Cossi, Francesca Mazzotta, Antonella Varaschin;

Design and mailing coordinator Francesca Cuicchio; ICT service SSNN INFN